The AI security landscape is moving fast. Just a few weeks ago, we discussed the “Triad” of open-source tools—Promptfoo, Strix, and CAI—that cover the spectrum from prompt engineering to infrastructure red teaming.

But the ecosystem has evolved. As enterprises move from simple chatbots to complex, autonomous agents, a new requirement has emerged: Continuous, Compliance-Ready Red Teaming.

Enter Giskard, a French powerhouse that bridges the gap between developer-friendly testing and enterprise-grade compliance. Today, we are updating our definitive guide to include Giskard, transforming our Triad into a Quartet. Here is how these four tools compare and why Giskard might be the missing piece in your LLM Ops pipeline.

1. Giskard: The Continuous Red Teaming Platform

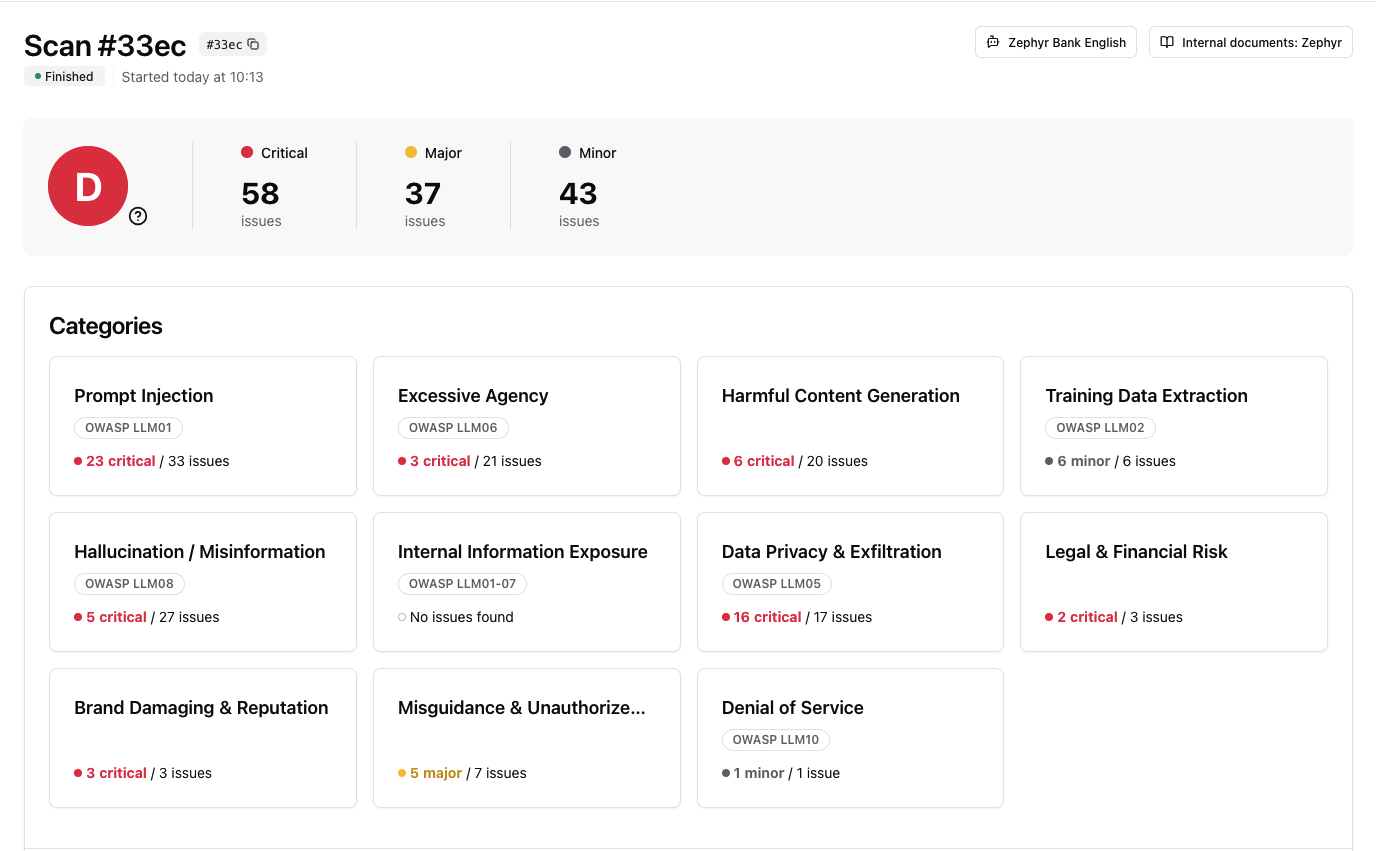

Core Philosophy: “Continuous Red Teaming aligned with Industry Standards (OWASP, NIST, EU AI Act).”

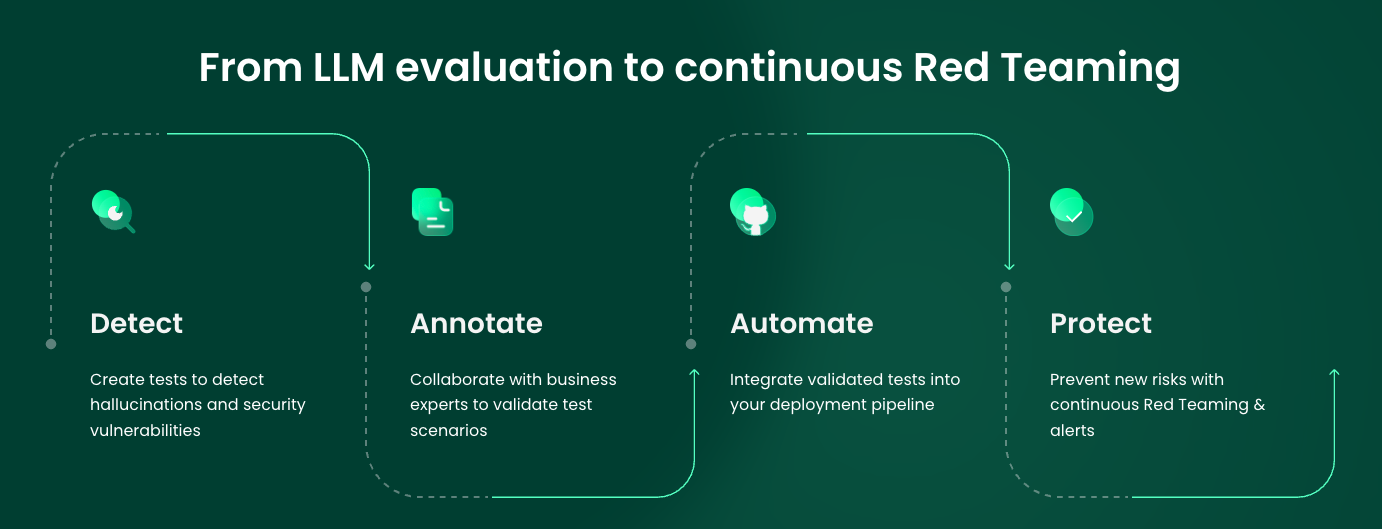

What it is: Giskard is an open-source testing framework and enterprise platform dedicated to the continuous evaluation of LLM agents. While other tools focus on “breaking” the model once, Giskard focuses on monitoring and improving the model throughout its lifecycle, with a heavy emphasis on RAG (Retrieval-Augmented Generation) and compliance.

Key Features (Based on the latest documentation):

- RAG Evaluation Toolkit (RAGET): Unlike generic testers, Giskard generates specific test sets to evaluate the Retriever, Generator, and Router components of your RAG system independently.

- Dynamic & Context-Aware Attacks: It uses an “AI Red Teamer” that doesn’t just throw static prompts; it interacts with your agent, adapting its strategy based on the agent’s responses to find edge cases.

- Compliance-First: It comes pre-loaded with detectors for OWASP Top 10 for LLM (LLM01-LLM10), mapping findings directly to NIST and MITRE ATLAS standards.

- Golden Dataset Generation: It automates the tedious process of creating “Golden Datasets” (ground truth) to prevent regressions over time.

When to use it: Use Giskard when you are building enterprise RAG applications that require strict adherence to safety standards. If you need to prove to an auditor (or your CISO) that your agent won’t hallucinate or leak PII, Giskard provides the reporting and continuous monitoring layer that developer-centric tools often lack.

2. The Updated Comparison Matrix

With Giskard in the mix, here is how the landscape shifts:

| Feature | Giskard | Promptfoo | Strix | CAI (Cybersecurity AI) |

|---|---|---|---|---|

| Primary Focus | RAG Evaluation & Compliance | LLM Output Quality & Regressions | Web App & API Vulnerabilities | Infrastructure & Deep Pentesting |

| Methodology | Continuous Red Teaming & Golden Datasets | Matrix Testing (Prompt vs Model) | Agentic Probing & Exploitation | Multi-Agent Orchestration |

| Key Strength | Context-Aware Attacks & Reporting | Developer Experience (DX) & CI/CD | Proof-of-Concept (PoC) Generation | Uncensored Models (alias1) |

| Target Audience | Enterprise AI Teams & QA | Developers & Prompt Engineers | AppSec Engineers | Red Teamers |

| Origin | 🇫🇷 France (EU AI Act Ready) | 🇺🇸 USA (Open Source) | Open Source Community | 🇪🇺 EU |

3. Revised Use Case: Securing “FinBot” with the Full Quartet

Let’s revisit our SecureBank “FinBot” scenario. How does Giskard change the architecture? It adds the critical Evaluation & Compliance layer.

Phase 1: The Guardrails (Promptfoo)

Promptfoo is still your first line of defense in the CI/CD pipeline.

- Task: Quick, cheap regression testing on commit. “Does the model still refuse to generate SQL code?”

Phase 2: The Deep Evaluation (Giskard) [NEW]

Before staging, the model goes through Giskard.

- Task: Giskard scans the RAG pipeline. It generates dynamic questions based on the bank’s internal policy documents (the Knowledge Base).

- Result: Giskard detects a “Hallucination” where the Retriever fetched the wrong document regarding loan interest rates, causing the Generator to give false financial advice. It also flags a potential “Sensitive Information Disclosure” (OWASP LLM06) that Promptfoo missed because it required a multi-turn conversation to trigger.

Phase 3: The Application Vulnerability Check (Strix)

The API wrapper is deployed to Staging.

- Task: Strix attacks the API endpoints.

- Result: Strix confirms an IDOR vulnerability in the chat history endpoint, proving that User A can read User B’s financial advice history.

Phase 4: The Infrastructure Siege (CAI)

The Red Team validates the production environment.

- Task: CAI orchestrates a complex attack, attempting to pivot from a compromised container to the core banking ledger.

Conclusion: Coverage is Key

The addition of Giskard allows organizations to cover the “Semantic” and “Compliance” gaps that pure security tools might miss.

- Promptfoo checks the Prompt.

- Giskard checks the Logic and Knowledge (RAG).

- Strix checks the Application Wrapper.

- CAI checks the Infrastructure.

By integrating these four, you aren’t just finding bugs; you are building an AI system that is secure, reliable, and compliant by design.

To further enhance your AI security strategy and implement this architecture, contact me on LinkedIn Profile or contact@ogw.fr

Frequently Asked Questions (FAQ)

Why do I need Giskard if I already use Promptfoo?

Promptfoo is excellent for deterministic prompt testing (Input/Output). Giskard excels at **RAG Evaluation**. It understands the context of your documents (Knowledge Base), checks if the retrieval step failed, and can simulate multi-turn conversations to find subtle hallucinations or jailbreaks that a single-turn prompt test would miss.

Is Giskard strictly for Python?

Giskard is a Python-based library (`pip install giskard`), making it ideal for data science workflows. However, it integrates with any LLM accessible via API (OpenAI, Mistral, etc.) and works seamlessly with frameworks like LangChain.

Which tool helps with the EU AI Act?

**Giskard** is specifically designed with the EU AI Act in mind. Its reporting features are tailored to generate the documentation and risk assessments required by regulators for high-risk AI systems.

Can I run Giskard on-premise?

Yes. Giskard offers an on-premise version of their Hub, allowing you to evaluate models and keep sensitive data entirely within your own VPC, which is critical for financial and healthcare use cases.

Resources

- Giskard GitHub: Open Source Repository

- Phare Benchmark: Giskard’s LLM Safety Leaderboard

- OWASP Top 10 for LLM: The standard against which Giskard automates testing.