
From Reactive to Autonomous: A Guide to the AI Maturity Model for Cybersecurity

In the modern Security Operations Center (SOC), “Artificial Intelligence” has become the buzzword of the decade. Yet, for many CISOs and security leaders, the reality of AI often feels disconnected from the marketing brochures. Teams are still drowning in alerts, struggling with burnout, and relying on static playbooks that fail to keep pace with machine-speed attacks.

The critical question is no longer if you should use AI, but how mature your implementation is. Are you using AI merely to summarize emails, or is it actively defending your perimeter?

Darktrace has introduced the AI Maturity Model for Cybersecurity, a framework designed to cut through the hype. It gives organizations a structured roadmap for assessing their current capabilities and a clear path from manual, labor-intensive operations to autonomous, AI-delegated resilience.

This guide explores the evolution of the security organization in the Era of AI.

The Three Dimensions of Maturity

Before you climb the ladder, understand that AI maturity isn’t just about software. The Darktrace model evaluates maturity across three interconnected dimensions:

- Outcomes: Moving from reactive chaos to proactive resilience.

- People: Shifting the human role from “doing the work” to “overseeing the strategy.”

- Technology: Evolving from basic automation scripts to adaptive, autonomous agents.

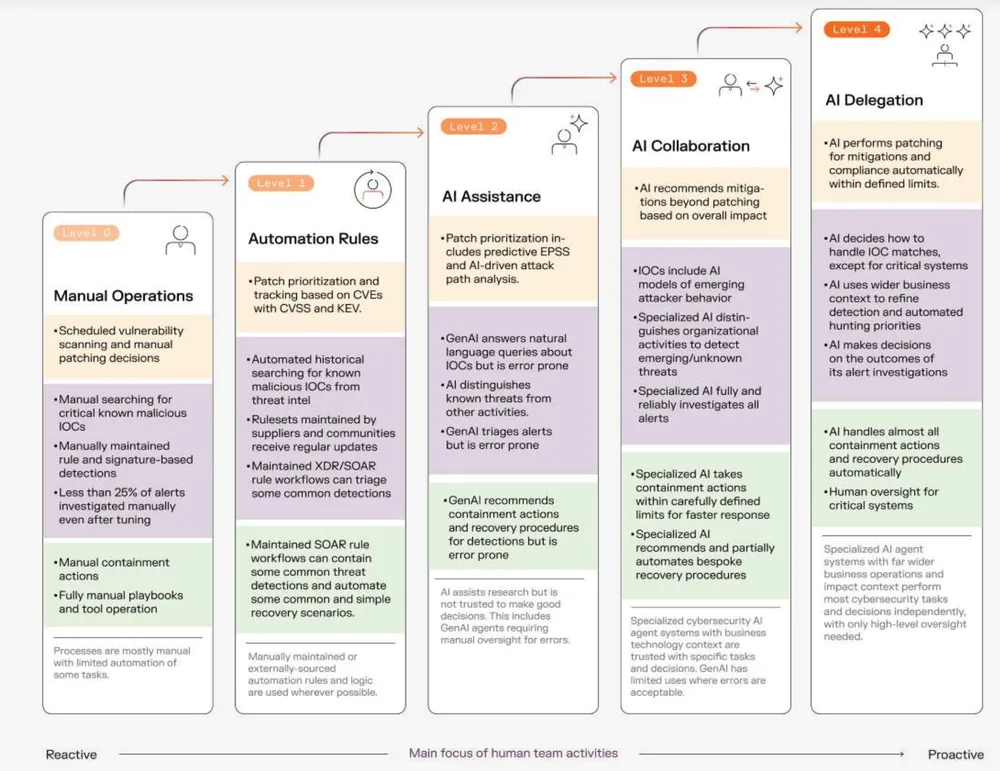

The 5 Levels of AI Maturity

Level 0: Manual Operations (The Bottleneck)

At this foundational level, security is entirely dependent on human bandwidth.

- The Reality: Analysts manually triage alerts, often leaving the vast majority (over 75%) uninvestigated due to time constraints. Vulnerability scanning is periodic, and patching is a scramble based on generic severity scores rather than actual risk.

- The Risk: High burnout, slow mean time to respond (MTTR), and a reactive posture in which defenders are always a step behind the attacker.
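The “over 75% uninvestigated” figure is ultimately a capacity problem. The back-of-the-envelope calculation below makes that concrete. The alert volume, team size, and per-analyst throughput are hypothetical numbers chosen for illustration, not data from the model.

```python
def uninvestigated_share(alerts_per_day: int, analysts: int, alerts_per_analyst: int) -> float:
    """Fraction of daily alerts that no analyst has time to review."""
    capacity = analysts * alerts_per_analyst
    reviewed = min(capacity, alerts_per_day)  # you cannot review more alerts than exist
    return 1 - reviewed / alerts_per_day

# Hypothetical SOC: 1,000 alerts/day, 3 analysts, ~80 alerts triaged per analyst per day.
share = uninvestigated_share(1_000, 3, 80)
print(f"{share:.0%} of alerts uninvestigated")  # prints "76% of alerts uninvestigated"
```

Hiring scales capacity linearly, while alert volume tends to grow with the environment, which is why Level 0 stays a bottleneck.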

Level 1: Automation Rules (The Scripted Fix)

Organizations begin to adopt SOAR (Security Orchestration, Automation, and Response) tools and XDR platforms.

- The Reality: We automate the “known knowns.” If an attack matches a specific signature, a rule triggers a pre-defined action.

- The Limitation: While this reduces some manual load, it creates a new burden: engineering fatigue. Humans must constantly write, tune, and update rules to keep up with changing attack vectors. If an attack doesn’t match a rule, it slips through.
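To see why Level 1 only covers the “known knowns,” consider a minimal sketch of signature-style matching. The rulebook, the `Event` fields, and the indicator values are hypothetical; real SOAR and XDR platforms use far richer rule languages, but the failure mode is the same: anything without a matching rule gets no response.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Event:
    src_ip: str
    file_hash: str

# Hypothetical rulebook: each rule pairs a known indicator with a pre-defined action.
RULES: dict[tuple[str, str], str] = {
    ("src_ip", "203.0.113.7"): "block_ip",
    ("file_hash", "known-bad-hash-001"): "quarantine_file",
}

def apply_rules(event: Event) -> Optional[str]:
    """Return the action of the first matching rule, or None if nothing matches."""
    for (field, indicator), action in RULES.items():
        if getattr(event, field) == indicator:
            return action
    return None  # no signature matched: the event passes with no response

print(apply_rules(Event("203.0.113.7", "abc")))   # block_ip
print(apply_rules(Event("198.51.100.9", "xyz")))  # None: a novel attack slips through
```

Every new attacker IP or file hash means another entry in `RULES`, which is exactly the maintenance load described above.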

Level 2: AI Assistance (The Copilot)

This is where Generative AI (GenAI) and Large Language Models (LLMs) typically enter the picture.

- The Reality: AI is used to summarize complex alerts, suggest remediation steps, or translate natural language queries into database searches. It lowers the skill barrier for junior analysts.

- The Warning: As the model notes, GenAI is prone to errors and hallucinations. While it speeds up triage, it requires heavy human oversight. The AI is a helpful assistant, but it cannot be trusted to make critical decisions.
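One lightweight form of the human oversight Level 2 requires is mechanically checking an LLM summary against the raw alert it summarizes. The sketch below is an illustrative assumption, not a feature of any particular product: it flags IP addresses that appear in the summary but never in the source alert. It catches only one narrow class of hallucination and says nothing about whether the summary’s reasoning is sound.

```python
import re

# Simple IPv4 pattern; it does not validate octet ranges (e.g., 999.1.1.1 would match).
IPV4 = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")

def unsupported_ips(summary: str, raw_alert: str) -> set[str]:
    """IPs mentioned in the summary that never appear in the raw alert."""
    return set(IPV4.findall(summary)) - set(IPV4.findall(raw_alert))

# Hypothetical alert and LLM-generated summary.
alert_text = "src=10.0.0.5 dst=203.0.113.7 proto=tcp dport=443 action=allowed"
summary_text = "Host 10.0.0.5 beaconed to 203.0.113.7 and 198.51.100.23 over HTTPS."
print(unsupported_ips(summary_text, alert_text))  # {'198.51.100.23'}
```

A non-empty result is a prompt for the analyst to fact-check, not proof that the summary is wrong.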

Level 3: AI Collaboration (The Partner)

This represents a significant leap. Here, specialized AI agents—capable of understanding specific business contexts—work alongside humans.

- The Reality: The AI doesn’t just summarize; it performs full investigations. It can distinguish between a malicious anomaly and an unusual but benign business process. It recommends containment actions tailored to the specific asset, not just a generic “block IP” command.

- The Shift: Humans start trusting the AI to handle complex tasks within defined boundaries. The AI prioritizes risks based on real-world attack paths, not just CVSS scores.

Level 4: AI Delegation (The Autonomous Defender)

The pinnacle of the maturity model.

- The Reality: AI systems operate independently across the security lifecycle—detection, investigation, containment, and recovery—at machine speed.

- The Human Role: Humans are no longer “operators”; they are governors. They provide strategic oversight and only intervene in the most critical, high-impact scenarios.

- The Outcome: The SOC scales without a proportional increase in headcount, incident response happens in near real time, and the organization achieves true cyber resilience.

Evolving Your Capabilities: A Domain-by-Domain Analysis

How does this maturity look in practice across different security domains?

1. Evolution of the SOC (Detection & Investigation)

- Early Maturity: The SOC is noisy. Analysts are hunting for known Indicators of Compromise (IOCs) manually. False positives are rampant.

- High Maturity: AI shifts from signature-based detection to behavioral analysis. It understands “normal” for your organization and detects unknown threats (zero-days, insider threats) without requiring prior knowledge of the attack vector. Investigations run autonomously 24/7, presenting analysts with a complete incident narrative rather than raw data.
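The idea of learning “normal” can be illustrated with a deliberately simple per-entity baseline. Darktrace describes its approach as unsupervised machine learning across many behavioral features; the sketch below swaps in a single-metric z-score test purely for illustration. The traffic figures and the threshold of 3 standard deviations are hypothetical.

```python
from statistics import mean, stdev

def is_anomalous(history: list[float], observed: float, threshold: float = 3.0) -> bool:
    """Flag `observed` if it lies more than `threshold` standard deviations
    from this entity's own historical baseline."""
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation; needs at least 2 points
    if sigma == 0:
        return observed != mu  # perfectly flat history: any change is unusual
    return abs(observed - mu) / sigma > threshold

# Hypothetical daily outbound megabytes for one workstation over two weeks.
baseline = [120, 135, 110, 128, 140, 125, 118, 131, 122, 137, 115, 129, 126, 133]
print(is_anomalous(baseline, 138))   # False: within this host's normal range
print(is_anomalous(baseline, 2400))  # True: flagged without any signature for the attack
```

The key property is that no prior knowledge of the attack is needed; the cost is that benign but unusual activity also gets flagged, which is why higher levels add business context.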

2. Evolution of Risk Management

- Early Maturity: Risk is managed via spreadsheets and scheduled scans. You patch vulnerabilities based on generic severity scores, often wasting time on non-critical assets.

- High Maturity: AI evaluates how vulnerabilities interact across your specific hybrid environment. It prioritizes fixing a “Medium” severity bug that is on a critical attack path over a “Critical” bug on an isolated server. Eventually, the AI performs the mitigation automatically within safe limits.
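The “Medium on an attack path beats Critical on an isolated server” rule can be sketched as graph reachability. Everything here is hypothetical: the three-host environment, the placeholder vulnerability IDs, and the 10x weighting for hosts on an attack path. Real attack path analysis models credentials, misconfigurations, and many more edge types.

```python
from collections import deque

# Hypothetical environment: an edge A -> B means "an attacker on A can reach B".
REACHABLE = {
    "internet": ["web-server"],
    "web-server": ["payments-db"],
    "payments-db": [],
    "lab-server": [],  # isolated: nothing reaches it
}
# Placeholder vulnerability IDs with CVSS base scores: a "Medium" and a "Critical".
VULNS = {"web-server": ("VULN-A", 5.3), "lab-server": ("VULN-B", 9.8)}

def reachable_from(start: str) -> set[str]:
    """Breadth-first search over the reachability graph."""
    seen, queue = {start}, deque([start])
    while queue:
        for nxt in REACHABLE.get(queue.popleft(), []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen

def on_attack_path(host: str, entry: str = "internet", target: str = "payments-db") -> bool:
    """True if the host sits on some path from the entry point to the critical asset."""
    return host in reachable_from(entry) and target in reachable_from(host)

def priority(host: str) -> float:
    _, cvss = VULNS[host]
    # Assumed weighting: being on an attack path outweighs raw severity.
    return cvss * (10 if on_attack_path(host) else 1)

print(sorted(VULNS, key=priority, reverse=True))  # ['web-server', 'lab-server']
```

Sorting by CVSS alone would put `lab-server` first; adding the path context reverses the order.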

3. Evolution of Containment & Recovery

- Early Maturity: Static playbooks. If ransomware hits, the response is often a panic button that disrupts business operations.

- High Maturity: Adaptive Response. The AI takes surgical action, for example interrupting only the specific malicious connection on an infected laptop while the user continues working on other tasks. Recovery is automated, restoring affected systems to their pre-infection state with minimal delay.
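The difference between a panic button and a surgical response can be sketched as a choice of blast radius. This is a minimal illustration under stated assumptions: the `Connection` type, host names, and addresses are hypothetical, the `malicious` flag is assumed to come from an upstream detection step, and the threshold of three malicious connections before full isolation is arbitrary.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Connection:
    host: str
    dest_ip: str
    dest_port: int
    malicious: bool  # assumed to come from an upstream detection step

def containment_plan(conns: list[Connection], isolate_threshold: int = 3) -> list[str]:
    """Block only malicious connections; isolate a host only if it has many of them."""
    plan: list[str] = []
    for host in sorted({c.host for c in conns if c.malicious}):
        bad = [c for c in conns if c.host == host and c.malicious]
        if len(bad) >= isolate_threshold:
            plan.append(f"isolate {host}")  # blunt fallback for a heavily compromised host
        else:
            # Surgical action: benign traffic from the same host keeps flowing.
            plan.extend(f"block {host} -> {c.dest_ip}:{c.dest_port}" for c in bad)
    return plan

traffic = [
    Connection("laptop-42", "203.0.113.7", 443, malicious=True),
    Connection("laptop-42", "192.0.2.10", 443, malicious=False),  # e.g., an app the user needs
]
print(containment_plan(traffic))  # ['block laptop-42 -> 203.0.113.7:443']
```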

How to Climb the Ladder: Implementation Steps

Moving from Level 1 to Level 4 isn’t about firing your team and installing a “magic box.” It requires a deliberate strategy:

- Conduct a Self-Assessment: Use the table below to honestly benchmark your current tools. Are you relying on static rules (L1) or adaptive learning (L3)?

- Define Guardrails for Autonomy: To move from Assistance (L2) to Collaboration (L3), define specific scenarios where you trust the AI to act. Start with low-risk actions (e.g., suspending a non-critical user account) and expand as trust grows.

- Invest in “Business-Aware” AI: Generic LLMs (L2) don’t know your business. To reach L3/L4, invest in AI that uses unsupervised machine learning to learn your specific network topology, user behavior, and asset criticality.

- Shift Talent Focus: As you automate triage and response, retrain your Tier 1 analysts to become Tier 3 threat hunters and AI governance specialists.
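The “define guardrails for autonomy” step can be written down as an explicit policy: which actions the AI may take on its own, and up to what asset criticality. The sketch below is one hypothetical way to encode that. The action names and the 1 to 5 criticality scale are assumptions, and “expanding trust” simply means raising a limit or adding an entry.

```python
from enum import Enum

class Decision(Enum):
    AUTO = "execute autonomously"
    ESCALATE = "require human approval"

# Hypothetical guardrail policy: actions the team allows the AI to take alone, mapped to
# the highest asset criticality (1 = low, 5 = crown jewel) at which it may take them.
AUTONOMY_POLICY = {
    "suspend_user_account": 2,
    "block_connection": 4,
}

def decide(action: str, asset_criticality: int) -> Decision:
    """Allow an action autonomously only if it is allow-listed and within its limit."""
    limit = AUTONOMY_POLICY.get(action)
    if limit is not None and asset_criticality <= limit:
        return Decision.AUTO
    return Decision.ESCALATE  # anything unlisted or above the limit goes to a human

print(decide("suspend_user_account", 1).value)  # execute autonomously
print(decide("suspend_user_account", 5).value)  # require human approval
print(decide("isolate_host", 1).value)          # require human approval (not yet trusted)
```

Defaulting to escalation keeps the policy safe as new action types appear: the AI gains autonomy only where the team has explicitly granted it.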

Maturity Benchmark Table

Use this reference to identify where your organization stands and where it needs to go next.

| Capability | Level 0: Manual | Level 1: Automation Rules | Level 2: AI Assistance | Level 3: AI Collaboration | Level 4: AI Delegation |
|---|---|---|---|---|---|
| Investigation | < 25% of alerts reviewed; manual triage. | SOAR handles common alerts; rules require maintenance. | LLMs summarize alerts; prone to hallucinations. | AI fully investigates all alerts with high precision. | AI autonomously investigates & resolves; humans review only critical cases. |
| Threat Detection | Signatures & IOCs; high false positives. | Technique-based rules; limited scope. | AI improves accuracy but is still rule-bound. | AI detects unknown/novel threats via behavioral analysis. | AI continuously adapts detection logic autonomously. |
| Response | Manual actions; slow reaction time. | Static automated responses (e.g., block IP). | AI recommends actions; human must execute. | AI executes tailored containment within limits. | AI handles containment & recovery autonomously. |
| Risk Management | Periodic scanning; manual patching. | CVSS-based prioritization; patch tracking. | Predictive attack path analysis. | AI recommends mitigations beyond patching (e.g., config changes). | AI performs mitigations automatically within defined limits. |
| Human Role | Task execution (Data Janitor). | Rule creation & SOAR maintenance. | Oversight of AI suggestions; fact-checking. | Strategic oversight; handling high-risk decisions. | Governance; setting policy; critical decision-making. |

Self-Assessment Checklist: Benchmarking Your Tools

Knowing the levels is useful, but knowing where you are is critical. Use this self-assessment rubric to evaluate your current tools and processes. Identify which column best describes your current operations to determine your maturity level.

1. Risk Management (Exposure & Vulnerability)

Are risks prioritized based on exploitability and business impact?

| L0: Manual | L1: Automation Rules | L2: AI Assistance | L3: AI Collaboration | L4: AI Delegation |
|---|---|---|---|---|
| Scheduled vulnerability scanning. | Patch prioritization based on CVEs, CVSS scores, and KEV (Known Exploited Vulnerabilities). | Prioritization includes predictive EPSS (Exploit Prediction Scoring System) scores and AI-driven attack path analysis. | AI recommends mitigations beyond just patching based on overall business impact. | AI performs patching and other mitigations automatically within defined limits. |
| Manual patching decisions. | Automated tracking of CVEs. | | | |

2. Threat Hunting

Is hunting proactive and AI-assisted?

| L0: Manual | L1: Automation Rules | L2: AI Assistance | L3: AI Collaboration | L4: AI Delegation |
|---|---|---|---|---|
| Critical known malicious IOCs searched manually. | Automated historical searching for known malicious IOCs from threat intel. | GenAI answers natural language queries (but is error-prone). | IOCs include AI models of emerging attacker behavior. | AI decides how to handle IOC matches autonomously (except for critical systems). |
| Unknown threats rarely investigated. | Unknown threats occasionally investigated. | Investigation prompted by AI suggestions. | AI finds unknown threats based on organizational behavior. | Unknown threats investigated continuously by AI. |

3. Threat Detection

Are detections adaptive and context-aware?

| L0: Manual | L1: Automation Rules | L2: AI Assistance | L3: AI Collaboration | L4: AI Delegation |
|---|---|---|---|---|
| Static rules. | Technique-based rules (XDR/SOAR). | AI distinguishes known threats from other activities. | AI distinguishes organizational activities to detect emerging/unknown threats. | AI uses wider business context to refine detection and automate priorities. |
| Detection engineering is fully manual and siloed. | Standard/community rulesets used. | Maintenance load reduced; some rules replaced by AI classifiers. | Significant reduction in maintenance; AI distinguishes normal vs. abnormal. | Detection engineering is mainly left to AI systems; humans intervene only in high-importance situations. |

4. Alert Investigation

Is triage automated or AI-assisted?

| L0: Manual | L1: Automation Rules | L2: AI Assistance | L3: AI Collaboration | L4: AI Delegation |
|---|---|---|---|---|
| Manual triage. | SOAR workflows triage common alerts. | GenAI workflows triage alerts but are error-prone. | Specialized AI fully investigates alerts with high precision. | AI makes decisions on the outcomes of alert investigations. |
| No context enrichment. | Basic enrichment. | Investigations enriched with deep context. | | |

5. Initial Containment & Recovery

Can containment occur autonomously? Is speed sufficient?

| L0: Manual | L1: Automation Rules | L2: AI Assistance | L3: AI Collaboration | L4: AI Delegation |
|---|---|---|---|---|
| No autonomous containment. | SOAR workflows for known cases. | GenAI recommends actions (error-prone). | AI executes actions within limits. | AI contains threats autonomously. |
| Always long delays. | Short delays for known threats. | Short delays for common scenarios. | Near real-time even for novel scenarios. | Near real-time and aware of wider business context. |
| Manual playbooks for recovery. | SOAR workflows for common recovery. | GenAI recommends recovery procedures (error-prone). | Specialized AI recommends bespoke recovery. | AI executes recovery autonomously within defined limits. |
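The checklist asks you to pick the best-fitting column for each domain, but it does not prescribe how to combine those answers into one number. The sketch below assumes a “weakest link” rule: the overall level is the lowest domain score, and the domains sitting at that score are the natural next targets. Both the aggregation rule and the example scores are illustrative assumptions, not part of the model.

```python
DOMAINS = (
    "Risk Management",
    "Threat Hunting",
    "Threat Detection",
    "Alert Investigation",
    "Containment & Recovery",
)

def assess(levels: dict[str, int]) -> tuple[int, list[str]]:
    """Return the overall level (weakest domain) and the domains holding it back."""
    missing = set(DOMAINS) - set(levels)
    if missing:
        raise ValueError(f"missing domains: {sorted(missing)}")
    if any(not 0 <= levels[d] <= 4 for d in DOMAINS):
        raise ValueError("each level must be between 0 and 4")
    overall = min(levels[d] for d in DOMAINS)
    laggards = [d for d in DOMAINS if levels[d] == overall]
    return overall, laggards

# Hypothetical self-assessment: one column choice per checklist section.
scores = {
    "Risk Management": 1,
    "Threat Hunting": 2,
    "Threat Detection": 2,
    "Alert Investigation": 1,
    "Containment & Recovery": 1,
}
print(assess(scores))
# (1, ['Risk Management', 'Alert Investigation', 'Containment & Recovery'])
```

A weakest-link rule is conservative by design: strong detection does little good if containment still waits on a manual playbook. An average would hide that gap.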

Conclusion

The journey through the AI Maturity Model is about more than efficiency; it is about survival. In an era where attackers use AI to launch sophisticated campaigns, a manual or rule-based defense will struggle to keep pace.

By aiming for AI Collaboration (L3) and ultimately Delegation (L4), organizations can reclaim the advantage. They can move from a posture of constant anxiety to one of confidence, knowing their security is running at machine speed, 24/7.

To further enhance your AI security strategy, contact me on LinkedIn or at contact@ogw.fr.

Frequently Asked Questions (FAQ)

What is the primary goal of the AI Maturity Model?

The model aims to guide organizations from manual, reactive security operations to autonomous, AI-delegated resilience, helping them assess current capabilities and plan for advanced AI integration.

What distinguishes Level 2 (AI Assistance) from Level 3 (AI Collaboration)?

Level 2 uses AI (like GenAI) to summarize and suggest, requiring heavy human oversight. Level 3 introduces specialized agents that perform full investigations and recommend tailored actions, with humans shifting to strategic oversight.

Does reaching Level 4 mean replacing human analysts?

No. At Level 4, the human role shifts from "operator" to "governor." Humans provide strategic oversight and intervene only in the most critical, high-impact scenarios while AI handles detection, investigation, and containment.

Why are "Automation Rules" (Level 1) considered a limitation?

Automation rules only address "known knowns" and require constant manual updating (engineering fatigue). If an attack doesn't match a specific rule, it slips through, leaving the organization vulnerable to novel threats.

How does AI improve risk management in this model?

AI evolves risk management from periodic scanning and generic scoring to predictive, continuous analysis. It prioritizes vulnerabilities based on real-world attack paths and business context, eventually performing automated mitigations.